If you have been reading this blog over the last several years, you know that I have been a strong supporter of using PowerPivot and the Tabular model to perform data analysis, even for power users, not just DBAs. What you may not have realized is that I’ve been secretly teaching you a little about data modeling. What is data modeling? It is really nothing more than the collection of all the data in tables and the relationships between those tables in a database. Did you know that there are at least two major ‘types’ of data models when it comes to how you structure your data into tables? Each one serves a different purpose. Therefore, it can reasonably be argued that neither one is more correct than the other. But you need to understand when to use each type. That is what I want to discuss today.

Most DBAs and developers who work with databases like SQL Server, Oracle, and many other common databases are comfortable with the relational model for creating tables and defining the relationships that connect them. In fact, they start to normalize a denormalized database in their heads within seconds of seeing the data schema. The relational model relies on the application of the rules of data normalization introduced by Edgar F. Codd in 1970. In essence, the goal of these rules is to minimize data redundancy. That in turn decreases the overall size of the database and makes it easier to maintain information that would otherwise be repeated across many records.

There are three primary rules that are used to determine whether a database, a collection of tables, has been normalized. These rules are:

First Normal Form: No row of data may contain repeating data or groups of data. Such repeating data must be split into a separate but connected table. For example, a sales order may contain the purchase of one or more items. Because the number of items included in a sales order is not predefined, the items must be split into a separate table with one row for each item on the sales order. These two tables are then typically connected by an order id.
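To make the sales order example concrete, here is a minimal Python sketch of splitting a repeating group into a separate table. The field names (`order_id`, `customer`, `items`) and the sample rows are hypothetical and are not taken from any real database; plain lists of dictionaries stand in for tables.

```python
# Hypothetical sales orders where each row carries a repeating group of items.
flat_orders = [
    {"order_id": 1, "customer": "Alice", "items": [("Widget", 2), ("Gadget", 1)]},
    {"order_id": 2, "customer": "Bob", "items": [("Widget", 5)]},
]


def to_first_normal_form(orders):
    """Split each order into one header row plus one detail row per item."""
    headers, details = [], []
    for order in orders:
        headers.append({"order_id": order["order_id"], "customer": order["customer"]})
        for product, quantity in order["items"]:
            # The order id is the value that connects the two tables.
            details.append(
                {"order_id": order["order_id"], "product": product, "quantity": quantity}
            )
    return headers, details


order_headers, order_details = to_first_normal_form(flat_orders)
```

After the split, `order_headers` has one row per order and `order_details` has one row per item, no matter how many items an order contains.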

Second Normal Form: This rule only applies to tables which have a compound primary index, an index built from two or more fields. In this rule, all other fields in the table must depend on the entire compound index value, not only a portion of it. A possible example of this might be a table of students at a school in which the primary index combines the school name (or id) with the student name (or id). Imagine that the table also includes the address and phone number of the school. This information does not depend on the combination of the school and the student. It depends only on the school. Therefore, this violation of the second normal form requires that the data related only to the school be split into a second table that includes only school information.
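The school and student example might be sketched in Python as follows. All field names and values here (`school_id`, `student_id`, `school_phone`, and so on) are invented for illustration, and the helper `split_school_fields` is a hypothetical name, not part of any library.

```python
# Hypothetical table keyed on (school_id, student_id). The school_phone field
# depends only on school_id, which violates second normal form.
enrollment = [
    {"school_id": "S1", "student_id": 101, "student_name": "Ann", "school_phone": "555-0100"},
    {"school_id": "S1", "student_id": 102, "student_name": "Ben", "school_phone": "555-0100"},
    {"school_id": "S2", "student_id": 101, "student_name": "Cal", "school_phone": "555-0200"},
]


def split_school_fields(rows, school_fields=("school_phone",)):
    """Move fields that depend only on the school into their own table."""
    schools = {}
    students = []
    for row in rows:
        # One school row per school_id, created the first time we see it.
        school = schools.setdefault(row["school_id"], {"school_id": row["school_id"]})
        for field in school_fields:
            school[field] = row[field]
        # The student row keeps everything except the school-only fields.
        students.append({k: v for k, v in row.items() if k not in school_fields})
    return list(schools.values()), students


school_table, student_table = split_school_fields(enrollment)
```

The phone number for school `S1` is now stored once instead of once per student, so a change to it only has to be made in one place.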

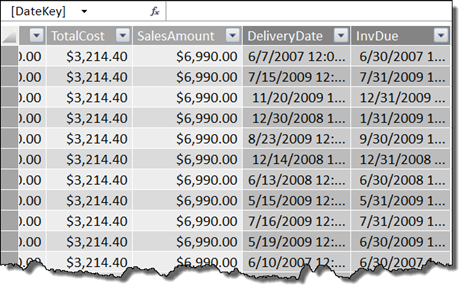

Third Normal Form: This rule requires that every field not part of the primary index depend only on the primary index, and not on any other non-key field in the record. Going back to my favorite Contoso database, one could argue that in the FactSales table, the Sales Amount field is redundant since this table also includes the sales quantity, sales price, and any sales discounts or returns. Why is it redundant? Because it can be calculated from the values of other columns in the record. Therefore, to fully achieve third normal form, this field should be removed.
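As a rough illustration of why a calculated field is redundant, the sketch below derives a sales amount from the other columns on demand instead of storing it. The exact formula (quantity times price, less discounts and returns) and the column names are my assumptions for the example; they are not taken from the actual Contoso FactSales definition.

```python
from decimal import Decimal


def sales_amount(row):
    """Derive the sales amount from the other columns in the row.

    Assumed formula for illustration only:
    quantity * unit_price - discount_amount - return_amount
    """
    return (
        row["quantity"] * row["unit_price"]
        - row["discount_amount"]
        - row["return_amount"]
    )


# Hypothetical fact row that stores no sales amount of its own.
fact_row = {
    "quantity": 3,
    "unit_price": Decimal("19.99"),
    "discount_amount": Decimal("5.00"),
    "return_amount": Decimal("0.00"),
}

amount = sales_amount(fact_row)  # Decimal("54.97")
```

Because the amount is computed when needed, it can never disagree with the quantity, price, discount, and return values it is based on.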

While there are other special case rules that can be applied to normalization of a database, most DBAs will be satisfied with a database that satisfies these three rules. They will then build the tables in their database corresponding to these rules and thus create the physical data model. It is called the physical data model because it defines the physical schema of the tables and the relationships between them.

However, business users of the data don’t look at the database that way. In fact, most business users would be baffled by the large number of tables required and the relationships between them. They will not understand why they need to combine data from a half dozen to a dozen tables just to answer a single question. As an example, let’s look at how a business user might think of just the products within the Contoso database.

The figure below shows the normalized data model for products and their subcategories and categories. As you can see from the schema, the only important piece of information in the subcategories table is the name of the subcategory. Similarly, the only important piece of information in the categories table is the name of the category.

Most business users would not think of category and subcategory names as part of separate tables, but as attributes of the product itself. In fact they would think of product information more like the following figure.

Imagine a database model with dozens of normalized tables and then try to envision how the typical business user sees the data with perhaps only a half dozen tables after denormalization.

In PowerPivot, we can address this issue in one of two ways. The first way would be to add the category and subcategory names to the product table in SQL Server before loading the data into PowerPivot. This would essentially make the physical data model the same as the logical data model used by the end-users. However, this data model would no longer be normalized.

Physically denormalizing the data tables is not the only solution. I could, as shown before in https://sharepointmike.wordpress.com/2012/07/21/power-pivot-hierarchies-part-2-of-powerpivot-2012-series/, build two calculated fields in the product table that use the RELATED() function to get the category and subcategory names and display them in the product table. I could then hide the two tables, dimCategory and dimSubcategory, from the user so that they would see a structure similar to their expected denormalized logical data model even though the data is still physically stored in a normalized structure.
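For readers who think better in code, here is a small Python stand-in for what those two calculated fields accomplish. It is not DAX and does not call RELATED(); it simply follows the same product to subcategory to category relationships with dictionary lookups. The ids, names, and the helper `add_related_names` are hypothetical.

```python
# Hypothetical normalized lookup tables, keyed by their ids.
dim_category = {
    1: {"category_name": "Audio"},
    2: {"category_name": "Computers"},
}
dim_subcategory = {
    10: {"subcategory_name": "Headphones", "category_id": 1},
    20: {"subcategory_name": "Laptops", "category_id": 2},
}
dim_product = [
    {"product_id": 100, "product_name": "Contoso Headphones", "subcategory_id": 10},
    {"product_id": 200, "product_name": "Contoso Laptop", "subcategory_id": 20},
]


def add_related_names(products, subcategories, categories):
    """Return product rows extended with subcategory and category names."""
    enriched = []
    for product in products:
        # Follow the relationship chain: product -> subcategory -> category.
        subcategory = subcategories[product["subcategory_id"]]
        category = categories[subcategory["category_id"]]
        enriched.append(
            {
                **product,
                "subcategory_name": subcategory["subcategory_name"],
                "category_name": category["category_name"],
            }
        )
    return enriched


product_view = add_related_names(dim_product, dim_subcategory, dim_category)
```

The lookup tables stay normalized and untouched, while `product_view` presents the flat, product-centric shape that a business user expects to see.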

The advantage of making the changes to the data model outside of PowerPivot is that it effectively reduces the amount of data that PowerPivot must store in memory, thus potentially allowing a larger model to be created. The advantage of making the changes inside PowerPivot, by using the RELATED() function and then hiding the technical tables that are still needed in the model but do not need to be seen by the client, is that it preserves the sanity of those DBAs who cringe every time someone tries to denormalize their data. Both methods will allow me to create similar Pivot tables in my Excel spreadsheet. Thus both methods can be considered correct.

The thing to remember is this. Data normalization was developed to make data storage and maintenance easier and more efficient. However, data analysis and reporting often require a fair amount of data denormalization. This is especially true when performing data analysis using reports, pivot tables and charts, and even third party data analysis tools. In fact, the logical view of the data schema aids in the performance of most data analysis. Just remember that your logical model is not a normalized model.

C’ya next time.